Introduction

The LXD team would like to announce the release of LXD 5.21.4 LTS!

This is the fourth bugfix release for LXD 5.21, which is supported until June 2029.

Thank you to everyone who contributed to this release!

Highlights

Ubuntu Pro attachment for LXD instances

LXD now supports auto-attachment of Ubuntu Pro to LXD instances. This means that Ubuntu Pro can be automatically enabled inside LXD instances if configured on the host where LXD is running.

This feature can be enabled by configuring the lxd_guest_attach setting in the Ubuntu Pro client.

pro config set lxd_guest_attach={on,available,off}

Documentation: How to configure Ubuntu Pro guest attachment

API extension: ubuntu_pro_guest_attach

SSH key injection via cloud-init

LXD now supports the ability to define public SSH keys in profile and instance config that will be configured inside the guest by cloud-init (if installed).

This feature uses the cloud-init.ssh-keys.<keyname> instance option. The <keyname> is an arbitrary key name, and the value must follow the format <user>:<ssh-public-key>. These keys are merged into the existing cloud-init seed data before being injected into an instance, ensuring no disruption to the current cloud-init configuration.

For example, use the following command to configure a public SSH key for the ubuntu user:

lxc config set <instance> cloud-init.ssh-keys.my-key "ubuntu:ssh-ed25519 ..."

It is also possible to use ssh_import_id, e.g. to import from GitHub:

lxc config set <instance> cloud-init.ssh-keys.my-key "ubuntu:gh:<github username>"
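
The <user>:<ssh-public-key> value can also be assembled from an existing public key file. The following is a minimal sketch, not part of LXD: the helper name ssh_key_value, the instance name c1 and the key path are all illustrative assumptions.

```shell
# Hypothetical helper: print "<user>:<ssh-public-key>" for a public key file.
ssh_key_value() {
    user="$1"
    pubkey_file="$2"
    # Only the first line of the file is used as the public key.
    printf '%s:%s\n' "$user" "$(head -n 1 "$pubkey_file")"
}

# Example usage (c1 and the key path are placeholders):
# lxc config set c1 cloud-init.ssh-keys.my-key "$(ssh_key_value ubuntu ~/.ssh/id_ed25519.pub)"
```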

Documentation: How to inject SSH keys into instances

API extension: cloud_init_ssh_keys

Storage improvements

Pure storage driver

LXD now supports a new storage driver, pure, which allows interaction with remote Pure Storage arrays.

Pure Storage is a software-defined storage solution. It provides redundant block storage over the network.

LXD supports connecting to Pure Storage storage clusters through two protocols: either iSCSI or NVMe/TCP. In addition, Pure Storage offers copy-on-write snapshots, thin provisioning, and other features. Note that iSCSI requires iscsiadm to be installed on the LXD host.

Using Pure Storage with LXD requires a Pure Storage API version of at least 2.21, which corresponds to a minimum Purity//FA version of 6.4.2.

The following command demonstrates how to create a storage pool named my-pool that connects to a Pure Storage storage array using NVMe/TCP.

lxc storage create my-pool pure \

pure.gateway=https://<pure-storage-address> \

pure.api.token=<pure-storage-api-token> \

pure.mode=nvme

Support for the pure storage driver is also included in the LXD UI.

Documentation: Pure Storage

API extension: storage_driver_pure

Ceph OSD pool replication size

Introduces two new configuration keys, ceph.osd.pool_size and cephfs.osd_pool_size, which can be set when adding or updating a ceph or cephfs storage pool to instruct LXD to set the replication size for the underlying OSD pools.
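
As a rough sketch of how the ceph key might be applied to an existing pool, the helper below wraps lxc storage set. The helper name, the pool name my-ceph and the size 3 are illustrative assumptions, and a real cluster may need other ceph.* options as well.

```shell
# Hypothetical helper: set the OSD pool replication size on a ceph storage pool.
set_ceph_pool_size() {
    pool="$1"
    size="$2"
    lxc storage set "$pool" ceph.osd.pool_size="$size"
}

# Example usage (pool name and size are placeholders):
# set_ceph_pool_size my-ceph 3
```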

API extension: storage_ceph_osd_pool_size

Container improvements

Unix device ownership inheritance from host

Adds a new ownership.inherit configuration option for unix-hotplug devices. This option controls whether the device inherits ownership (GID and/or UID) from the host. When set to true and GID and/or UID are unset, host ownership is inherited. When set to false, host ownership is not inherited and ownership can be configured by setting gid and uid.
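
A minimal sketch of adding such a device with inherited ownership follows. The helper name, the instance name c1, the device name my-usb and the vendor and product IDs are placeholders chosen for illustration.

```shell
# Hypothetical helper: add a unix-hotplug device that inherits host ownership.
# uid and gid are deliberately left unset so that inheritance applies.
add_inherited_hotplug() {
    instance="$1"
    device="$2"
    vendor="$3"
    product="$4"
    lxc config device add "$instance" "$device" unix-hotplug \
        vendorid="$vendor" productid="$product" ownership.inherit=true
}

# Example usage (all values are placeholders):
# add_inherited_hotplug c1 my-usb 0403 6001
```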

API extension: unix_device_hotplug_ownership_inherit

Unix device subsystem support

Adds a new subsystem configuration option for unix-hotplug devices. This adds support for detecting unix-hotplug devices by subsystem, and can be used in conjunction with productid and vendorid.

API extension: unix_device_hotplug_subsystem_device_option

Container BPF delegation

Added a new security.delegate_bpf.* group of options to support eBPF delegation using the BPF Token mechanism. See Privilege delegation using BPF Token for more information.

API extension: container_bpf_delegation

Virtual machine improvements

Allow attaching VM root volumes as disk devices

LXD now allows attaching virtual machine volumes as disk devices to other instances.

This can be useful for backup/restore and data recovery operations where an application running inside an instance needs to access the root disk of another VM.

To prevent concurrent access, security.protection.start must be set on an instance before its root volume can be attached to another virtual machine.

lxc config set vm1 security.protection.start=true

lxc storage volume attach my-pool virtual-machine/vm1 vm2

Since simultaneous access to storage volumes with content-type: block is considered unsafe, certain limitations apply:

- When security.protection.start is enabled, the root volume can be attached to only one other instance. This is recommended for interactive use, such as when access to the block device is needed for volume recovery.

- Enabling security.shared removes the restriction of how many instances can access the block volume simultaneously. However, it comes with the risk of volume corruption.

- When neither security.protection.start nor security.shared is enabled, the root volume cannot be attached to another instance.

Documentation: Attach instance root volumes to other instances

API extension: vm_root_volume_attachment

Control the virtiofs I/O thread pool size for VM directory disks

When adding a virtiofs disk device to a VM (one that shares a host directory with the guest) it is now possible to specify the thread pool size that the virtiofsd process (that LXD starts) will use. This can be useful for improving the I/O throughput for directory based shares into a guest when there are multiple concurrent I/O operations.

E.g.

lxc config device add my-vm my-disk \

source=/a/host/directory path=/path/in/guest \

io.threads=64 # Increases from the default of no thread pool.

Documentation: Type: disk - io.threads

API extension: disk_io_threads_virtiofsd

PCI hotplug (from Incus)

Added support for hotplugging pci devices.

E.g.

# Get address of device on LXD host

lspci

...

03:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

...

# Add it to LXD VM

lxc config device add v1 my-device pci address=03:00.1

lxc exec v1 -- lspci

...

06:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

...

API extension: pci_hotplug

Project improvements

Per-network project uplink IP limits

LXD now supports new project-level limit configuration keys that restrict the maximum number of uplink IPs allowed from a specific network within a project. These keys define the maximum number of IPs from an uplink network that can be assigned to entities inside a given project. These entities can be other networks (such as OVN networks), network forwards or load balancers.

lxc project set <project> limits.network.uplink_ips.ipv{4,6}.<network> <max>

# Example:

lxc project set my-project limits.network.uplink_ips.ipv4.lxdbr0 5

Documentation: Reference - Project limits

API extension: projects_limits_uplink_ips

All projects support for additional entities (from Incus)

The LXD API and CLI (via the --all-projects flag) now support listing images, networks, network ACLs, network zones, profiles and storage buckets from all projects.
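
As an illustration, the flag can be passed to the list subcommand of two of these entity types. The wrapper function below is a hypothetical convenience and not part of the lxc client.

```shell
# Hypothetical helper: list networks and profiles across every project
# the current user can see.
list_across_projects() {
    lxc network list --all-projects
    lxc profile list --all-projects
}

# Example usage:
# list_across_projects
```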

API extensions:

- images_all_projects

- network_acls_all_projects

- network_zones_all_projects

- networks_all_projects

- profiles_all_projects

- storage_buckets_all_projects

Add nic and root disk devices to default profile when creating new projects

It is now possible to add nic and root disk devices to the default profile of new projects via the --network and --storage flags on lxc project create.

E.g. To create a new project with a default profile that has a nic device connected to the lxdbr0 network and a root disk device using the mypool storage pool:

lxc project create foo --network lxdbr0 --storage mypool

API extension: project_default_network_and_storage

Cluster improvements

Skip restoring instances during cluster member restore

Sometimes, after evacuating a cluster member via lxc cluster evacuate and performing maintenance on it, it may not be desirable or possible to restore the evacuated instances back to that member.

However one may still want to bring that cluster member back online so that it can be used for new workloads or be a target for evacuees from other members in the future.

To accommodate this the lxc cluster restore command now takes a “skip” value for the --action flag. This restores only the cluster member status without starting local instances or migrating back evacuated instances.
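
A short sketch of the invocation, wrapped in a helper for reuse. The function name and the member name member1 are illustrative assumptions.

```shell
# Hypothetical helper: bring a cluster member back online without
# starting or migrating back any of its evacuated instances.
restore_member_only() {
    member="$1"
    lxc cluster restore "$member" --action skip
}

# Example usage (member1 is a placeholder):
# restore_member_only member1
```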

API extension: clustering_restore_skip_mode

Cluster member specific network list

The /1.0/networks endpoint now accepts a target parameter that allows retrieving the unmanaged network interfaces on a particular cluster member.
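
One way to try this from the command line is through lxc query, as sketched below. The helper name and the member name member1 are placeholders.

```shell
# Hypothetical helper: query the networks endpoint for one cluster member.
member_networks() {
    member="$1"
    # The URL is quoted so the shell does not interpret "?" as a glob.
    lxc query "/1.0/networks?target=$member"
}

# Example usage (member1 is a placeholder):
# member_networks member1
```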

API extension: network_get_target

Cluster groups usage tracking and deletion protection

A new used_by field has been added to the API response for a cluster group. Deletion of a cluster group is now disallowed if the cluster group is referenced by project configuration (see restricted.cluster.groups).

API extension: clustering_groups_used_by

Authn/authz improvements

Return entitlements when listing entities in the LXD API

When listing entities via the LXD API the results can now optionally be returned with an access_entitlements field that lists the additional entitlements the requesting user has on the entities being listed.

This requires that the current identity is fine-grained and the request to fetch the LXD entities has the with-access-entitlements=<comma_separated_list_of_candidate_entitlements> query parameter present.

This feature is used by the LXD UI to improve the user experience when using fine-grained authorization to restrict user actions.

For example, as a fine-grained identity that is a member of a group that has the “operator” entitlement on the “default” project:

lxc query /1.0/projects/default?with-access-entitlements=can_view,can_edit,can_create_instances

{

"access_entitlements": ["can_view","can_create_instances"],

"config": { ... },

"description": "Default LXD project",

"name": "default",

"used_by": [ ... ]

}

Note that the “can_edit” entitlement was not returned because the user does not have this entitlement on the project (the built-in operator role does not include it).

Additionally when querying /1.0/auth/identities/current the response now contains a new fine_grained field indicating whether the current identity interacting with the LXD API has fine-grained authorization. This means that associated permissions are managed via group membership.

API extension: entities_with_entitlements

Configuration scope information in the metadata API

There is now scope information added for each configuration setting in the GET /1.0/metadata/configuration API endpoint. Options marked with a global scope are applied to all cluster members. Options with a local scope must be set on a per-member basis.

API extension: metadata_configuration_scope

Custom OIDC scopes

LXD now supports an oidc.scopes configuration key, which accepts a space-separated list of OIDC scopes to request from the identity provider. This configuration option can be used to request additional scopes that might be required for retrieving identity provider groups from the identity provider. Additionally, the optional scopes profile and offline_access can be unset via this setting. Note that the openid and email scopes are always required.
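
Because openid and email must always be present, it can help to build the value programmatically. The following is a sketch only: the helper name oidc_scopes is hypothetical, and groups is just an example of an extra scope that an identity provider might require.

```shell
# Hypothetical helper: build a space-separated oidc.scopes value that always
# starts with the required "openid" and "email" scopes, then appends any
# extra scopes passed as arguments, skipping duplicates.
oidc_scopes() {
    scopes="openid email"
    for s in "$@"; do
        case " $scopes " in
            *" $s "*) ;;                   # already present
            *) scopes="$scopes $s" ;;
        esac
    done
    printf '%s\n' "$scopes"
}

# Example usage ("groups" is a placeholder scope):
# lxc config set oidc.scopes "$(oidc_scopes groups)"
```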

API extension: oidc_scopes

Support for OIDC authentication client secret

Added support for the oidc.client.secret configuration key. If set, the LXD server will use this value in the OpenID Connect (OIDC) authorization code flow, which is used by LXD UI.

This allows using OIDC authentication with Identity Providers that require a client secret (such as Google).

Documentation: Server configuration - oidc.client.secret

API extension: oidc_client_secret

Client certificate presence

It is now possible for a client to identify whether it is sending a TLS certificate via the new client_certificate field added to the /1.0 endpoint. This is set to true if the client is sending a certificate. This is used by the LXD UI to assist with new certificate generation.

API extension: client_cert_presence

Miscellaneous improvements

Override snapshot profiles on copy option

Added an API request option to specify that a snapshot’s target profile on an instance copy should be inherited from the target instance. This fixes an issue when using the lxc copy --no-profiles flag.

API extension: override_snapshot_profiles_on_copy

Device filesystem UUID in Resources API

Added a new field called device_fs_uuid that contains the filesystem UUID of each disk and partition, indicating whether or not a filesystem is located on the device.

API extension: resources_device_fs_uuid

Removal of instance devices via PATCH API request

The PATCH /1.0/instances/{name} endpoint now allows removing an instance device by setting its value to null in the devices map.
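
The request body for such a removal could be built and sent as sketched below. The helper name, the device name my-device and the instance name my-instance are illustrative, and the helper does not escape special characters in the device name.

```shell
# Hypothetical helper: print a PATCH body that removes one device by
# mapping its name to null in the devices map.
remove_device_body() {
    printf '{"devices": {"%s": null}}\n' "$1"
}

# Example usage (names are placeholders):
# lxc query --request PATCH --data "$(remove_device_body my-device)" /1.0/instances/my-instance
```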

API extension: device_patch_removal

Support for exporting in new backup metadata format

LXD 6.4 gained a new default metadata format for the instance backup.yaml file.

LXD 5.21.4 keeps the default format as v1 for compatibility with older point releases, however it now optionally supports exporting in the new format as well.

When exporting an instance, the specific version to use can be provided using the --export-version flag:

lxc export v1 --export-version 2

The same applies when exporting a custom storage volume:

lxc storage volume export pool1 vol1 --export-version 2

This allows exporting in the newer version 2 format which allows for more information about attached custom volumes to be included in the backup.

API extension: backup_metadata_version

LXD-UI advancements

Add SSH keys to instances in LXD-UI

When creating an instance in the UI, you can now add SSH keys to it. The keys will be applied by cloud-init on boot. Alternatively you can create a profile with SSH keys and apply it to multiple instances.

Create TLS identity and simpler onboarding experience in LXD-UI

On TLS identity creation, you can apply groups with permissions for the new identity.

The onboarding process was simplified. It now relies on a single client certificate file download to import into the local browser. Trusting the client certificate on the server now relies on trust tokens. This removes the need to download multiple files that can be mixed up, and you no longer have to upload anything to the server in order to get started.

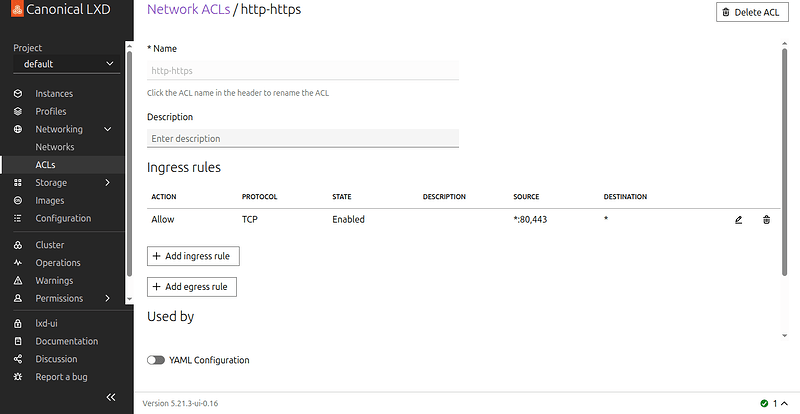

Network ACL support in LXD-UI

You can create and manage Network ACLs directly in the UI. The network overview page shows ACLs applied to the network and allows changing the applied ACLs.

Instances from all projects in LXD-UI

A new page in the UI lists all instances on a LXD server or cluster, from all projects the current user has access to.

Project creation with default network in LXD-UI

On project creation, you can select a default network for the default profile of the new project.

Instance export configurable in LXD-UI

Additional options on exporting an instance are now available in the UI.

Disk and CPU metrics for instances in LXD-UI

Memory and root filesystem usage graphs can be enabled as optional columns of the instance list.

Additional disk metrics and CPU usage are now available on the instance detail page.

Improved experience for users with restricted permissions in LXD-UI

Users with restricted permissions will see all available actions in the UI. Interactions that the user has no permission for are grayed out, and a reason is shown on hover.

Improved storage on clusters in LXD-UI

Pool usage is now displayed on a per cluster member basis for storage pools that are local, such as those using the directory or ZFS drivers. For cluster-wide storage pools such as ceph, there is a single usage graph.

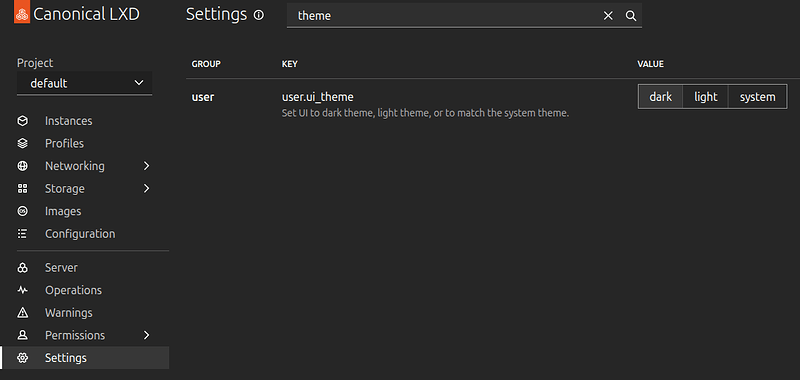

Dark mode for LXD-UI

The UI will adjust to the system theme automatically. Users can force a dark or light mode in the settings.

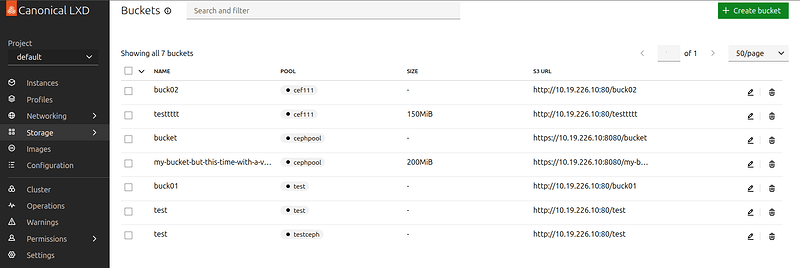

Storage buckets in LXD-UI

- Added support for creating and listing of storage buckets.

- Added support for management of storage bucket keys.

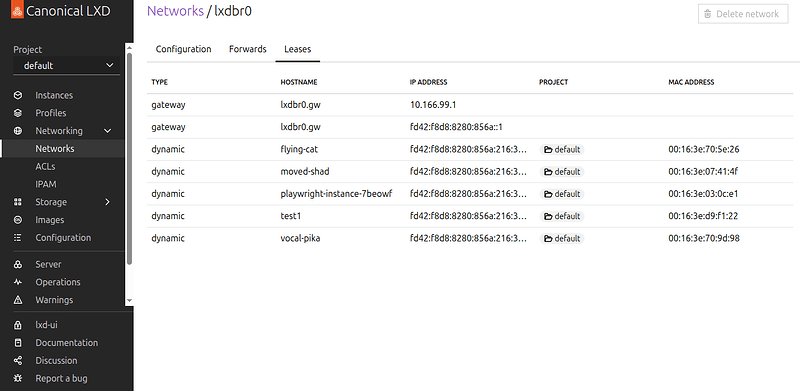

Networking in LXD-UI

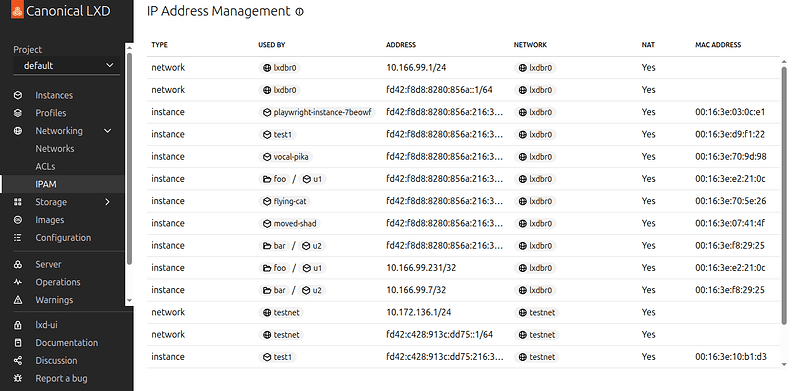

- Added support for management of network types macvlan and sriov.

- Introduced new network details page for displaying active IP leases.

- Introduced new IPAM page under networks, which unifies IP address management.

- Added an option to download ACL logs.

- The network bridge configuration has a new setting for bridge.external_interfaces.

- Added the mac address in the instance detail page and instance list side panel.

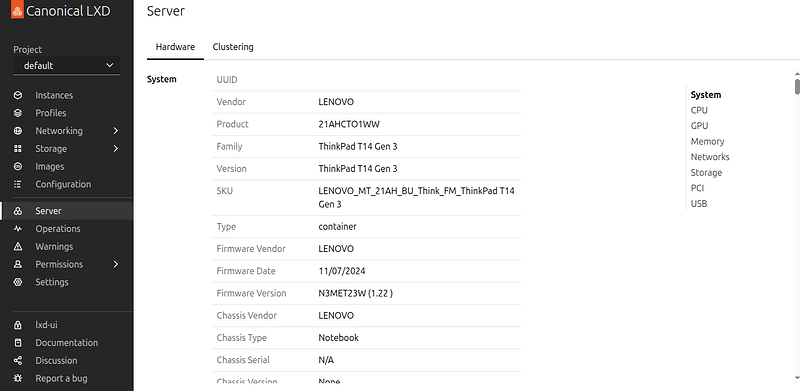

Server hardware in LXD-UI

- Surfacing information about server hardware

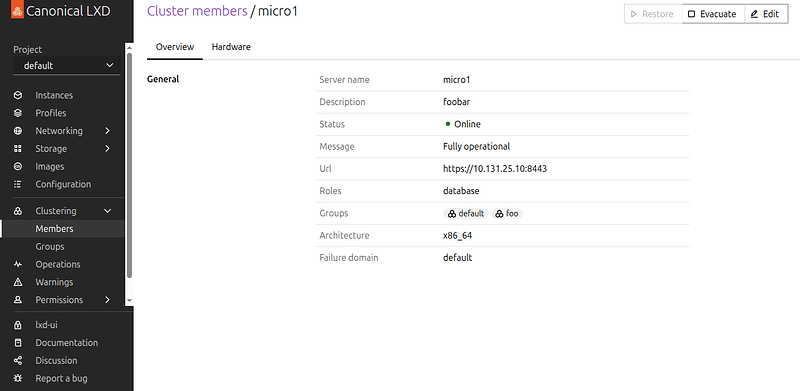

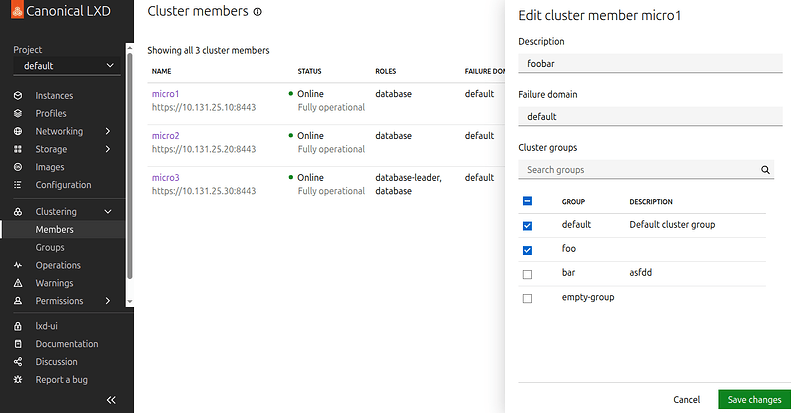

Clustering in LXD-UI

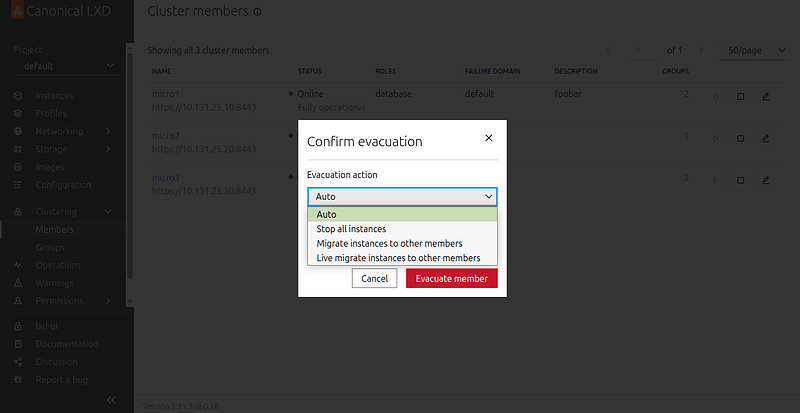

- Added support for configuring cluster member evacuation and restoration modes.

- Additionally, error and success state handling has been improved for both actions.

- New distinct pages for cluster members and groups

- New cluster member detail pages with hardware details

- Edit cluster member details.

Export and import of storage volumes in LXD-UI

- Added LXD UI option to export custom storage volumes, enabling manual backups.

- Added option to import custom storage volumes, enabling restore of backups.

Updated minimum Go version to 1.24.5

The minimum version of Go required to build LXD is now 1.24.5.

Snap packaging dependency updates

- dqlite: Bump to v1.17.2

- go: Bump to 1.24.6

- lxc: Bump to v6.0.4

- lxcfs: Bump to v6.0.4

- lxd-ui: Bump to 0.15.2

- nvidia-container: Bump to v1.17.6

- nvidia-container-toolkit: Bump to v1.17.7

- qemu: Bump to 8.2.2+ds-0ubuntu1.8

- zfs: Bump to zfs-2.3.3, zfs-2.2.8 and zfs-2.1.16

Complete changelog

Here is a complete list of all changes in this release.

Downloads

The release tarballs can be found on our download page.

Binary builds are also available for:

- Linux: snap install lxd

- macOS: brew install lxc

- Windows: choco install lxc