can you access it from here?

https://web.archive.org/web/20240111122217/https://ubuntuforums.org/showthread.php?t=2446164

LOL, I know how you feel, it took me a full day to get the Gist.

And don’t ask A Developer, your head will explode…LOL

I’ve never used @vidtek99’s suggestion, but I could PM you the content of that link if you want.

@coffeecat Thank you for many, many years of service to the old UF. You will be missed.

@sgt-mike this is how I like my snapshots (manual).

Snapshot listing is set to off (`listsnapshots` only controls whether a plain `zfs list` shows snapshots):

```
zpool get listsnapshots
NAME   PROPERTY       VALUE  SOURCE
bpool  listsnapshots  off    default
dozer  listsnapshots  off    default
rpool  listsnapshots  off    default
tank   listsnapshots  off    default
```

Daily Manual snapshots:

```
zfs list -r -t snapshot -o name,creation
NAME  CREATION
bpool@12-31  Tue Dec 31 12:48 2024
bpool/BOOT@12-31  Tue Dec 31 12:48 2024
bpool/BOOT/ubuntu_5u9nfk@12-31  Tue Dec 31 12:48 2024
rpool@12-31  Tue Dec 31 12:48 2024
rpool/ROOT@12-31  Tue Dec 31 12:48 2024
rpool/ROOT/ubuntu_5u9nfk@12-31  Tue Dec 31 12:49 2024
rpool/ROOT/ubuntu_5u9nfk/srv@12-31  Tue Dec 31 12:49 2024
rpool/ROOT/ubuntu_5u9nfk/usr@12-31  Tue Dec 31 12:49 2024
rpool/ROOT/ubuntu_5u9nfk/usr/local@12-31  Tue Dec 31 12:49 2024
rpool/ROOT/ubuntu_5u9nfk/var@12-31  Tue Dec 31 12:49 2024
rpool/ROOT/ubuntu_5u9nfk/var/games@12-31  Tue Dec 31 12:50 2024
rpool/ROOT/ubuntu_5u9nfk/var/lib@12-31  Tue Dec 31 12:50 2024
rpool/ROOT/ubuntu_5u9nfk/var/lib/AccountsService@12-31  Tue Dec 31 12:50 2024
rpool/ROOT/ubuntu_5u9nfk/var/lib/NetworkManager@12-31  Tue Dec 31 12:50 2024
rpool/ROOT/ubuntu_5u9nfk/var/lib/apt@12-31  Tue Dec 31 12:50 2024
rpool/ROOT/ubuntu_5u9nfk/var/lib/dpkg@12-31  Tue Dec 31 12:50 2024
rpool/ROOT/ubuntu_5u9nfk/var/log@12-31  Tue Dec 31 12:51 2024
rpool/ROOT/ubuntu_5u9nfk/var/mail@12-31  Tue Dec 31 12:51 2024
rpool/ROOT/ubuntu_5u9nfk/var/snap@12-31  Tue Dec 31 12:51 2024
rpool/ROOT/ubuntu_5u9nfk/var/spool@12-31  Tue Dec 31 12:51 2024
rpool/ROOT/ubuntu_5u9nfk/var/www@12-31  Tue Dec 31 12:51 2024
rpool/USERDATA12-31@12-31  Tue Dec 31 12:52 2024
rpool/USERDATA12-31/home_aho6ok@12-31  Tue Dec 31 12:52 2024
rpool/USERDATA12-31/root_aho6ok@12-31  Tue Dec 31 12:52 2024
tank@11-1  Fri Nov 1 8:13 2024
tank@12-27  Fri Dec 27 11:15 2024
tank@12-31  Tue Dec 31 12:52 2024
```

Yep even ‘tank’ gets them.
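For anyone who wants to script manual snapshots like the ones listed above, here is a minimal sketch. It is not necessarily how the listing was produced: the `snapshot_pool` function and the `PRINT_ONLY` switch are names made up for this example, and the label format (`date +%m-%d`, e.g. `12-31`) is only assumed to be close to what was used. `zfs snapshot -r` snapshots a dataset and all of its children in one go.

```shell
#!/bin/sh
# Sketch only: recursive, date-named manual snapshots.
# PRINT_ONLY and snapshot_pool are hypothetical names for this example.
PRINT_ONLY="${PRINT_ONLY:-1}"   # 1 = only print the command, 0 = really run it

snapshot_pool() {
    # $1 = pool or dataset name, $2 = optional label (defaults to month-day)
    pool="$1"
    label="${2:-$(date +%m-%d)}"
    if [ "$PRINT_ONLY" = "1" ]; then
        echo "zfs snapshot -r ${pool}@${label}"
    else
        # -r also snapshots every child dataset under the pool
        zfs snapshot -r "${pool}@${label}"
    fi
}

# Usage (prints three commands while PRINT_ONLY=1):
#   for p in bpool rpool tank; do snapshot_pool "$p"; done
```

Run it with `PRINT_ONLY=0` (as root) once the printed commands look right.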

@coffeecat

I don’t think it’s a UF problem. But when every computer in the house (something like 7, not counting my servers), all with differing setups, times out, I’m left to believe it’s at the ISP or somewhere along the route to the UF server, not UF itself. And honestly it’s not what I’m concerned about, as the site will become dormant/read-only.

I’m just pointing out I can’t get there to view what some are trying to show.

I have no doubt the site is running fine.

@rubi1200 Hey, that link worked. I can see, I can see! LOL

@anon36188615

On sanoid, I did watch a YouTube video of Jim Salter discussing and demonstrating it, which was actually pretty clear. But before going into it I’ll definitely need to read up on it.

Yeah my snapshots are set in the same manner. Manual

Now, just for the giggle factor, I want to see (1) how long it takes and (2) how much compression I get.

I have a tar backup (via Webmin) running on the smallest media directory in the pool, 1.67TB. Not that I plan on using tar, but it gives me a baseline. (It’s at 232GB right now and growing.)

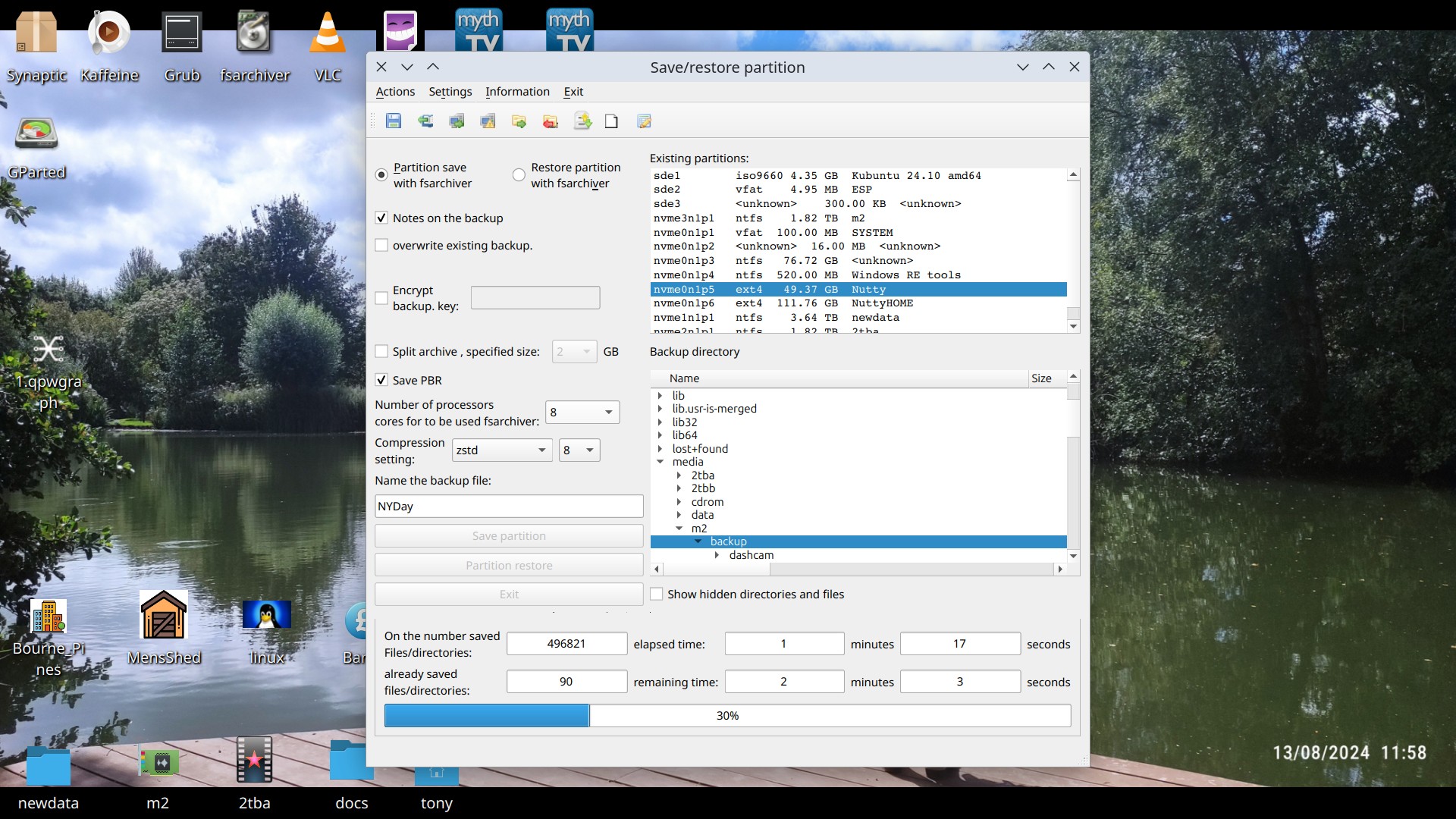

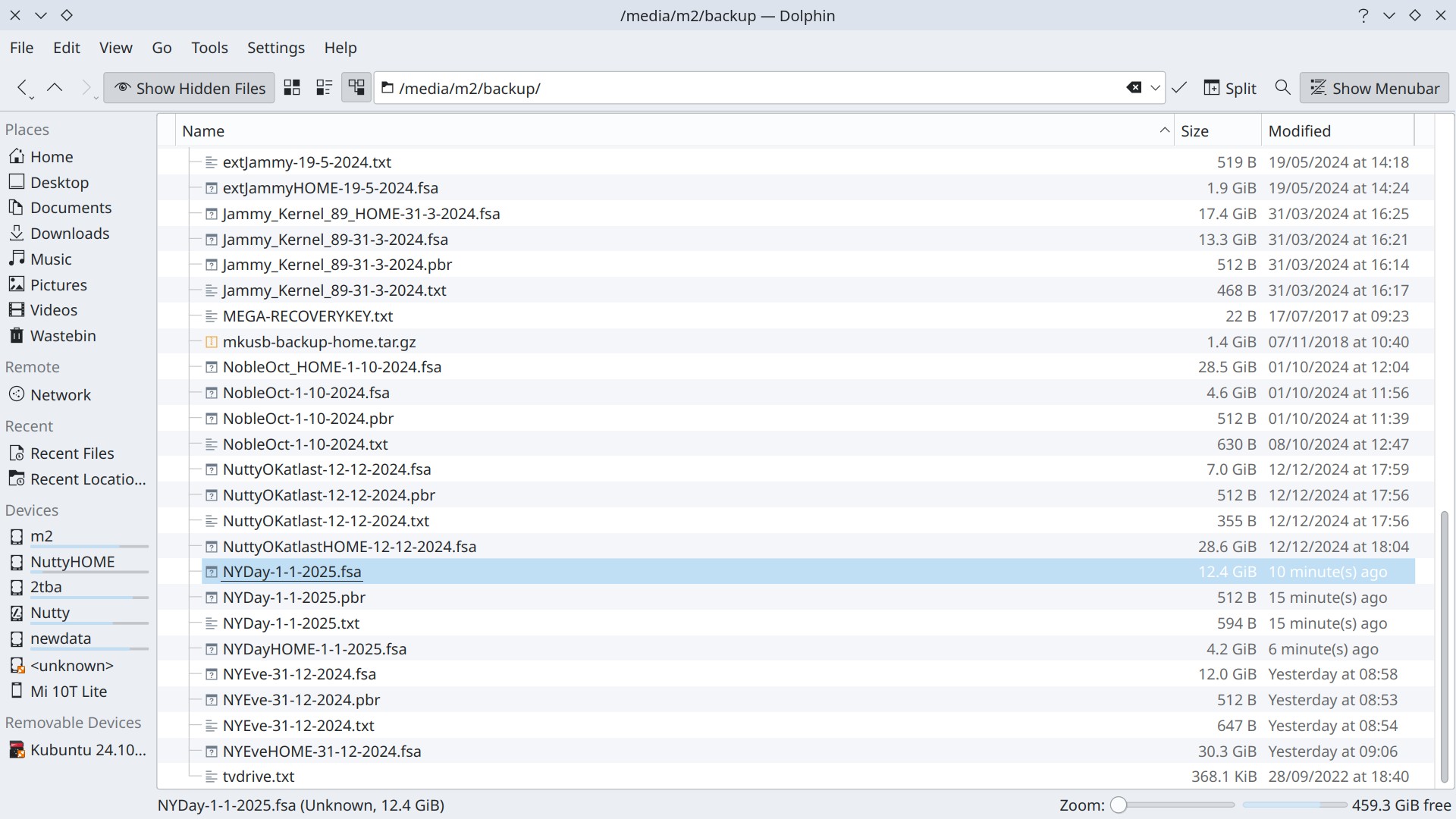

Screenshot of my backup routine using QT-Fsarchiver. As this is a “live” backup, it gives a notification of that before starting. Then you can add notes to the backup in a .txt file that also has the o/s system details:

to be protected/secured partition: / (root system directory)

Operating system: DISTRIB_DESCRIPTION=“Ubuntu 24.04.1 LTS”

Kernel: Linux 6.8.0-35240528-generic x86_64

Backup file name: NYDay-1-1-2025.fsa

Partition name: nvme0n1p5

Partition type: ext4

UUID: fa99da28-a515-49b6-91fa-41297aabd0ec

Description: Nutty

Partition size: 48,29 GB

Assignment of the partition: 31,32 GB

Assignment of the partition: 68 %

Compression: zstd level:8

Approximate image file sizes: 12,84 GB

Path of the backup file: /media/m2/backup

Free space on the hard disk to be backed up to: 476GB

Other notes: All ok

Multiple choices of compression, I use ZSTD with a compression factor of 8.

It can also save the PBR to preserve booting system.

It’s a brilliant little programme and I have used it in differing iterations beginning with a cli version only for about ten years. There are no esoteric learning curves of obscure commands, it just works out of the box in a simple straight-forward manner.

Cheers Tony.

@vidtek99

That does look quite stunning and capable. And I will explore it, in addition to some other solutions listed.

Just as a sidebar to the thread, an update on the tar attempt and a little bit on my hardware concept. Yes, the tar backup was slow, but to be fair 1.7TB of data will take a while no matter the program, and the full backup of just the data will be almost 6x that. Compression was effectively none: the tar.gz file is the same size as the source. At least now I have a baseline to go off of.

I have gone back and forth on the medium to put the backup on and concluded that I’ll use spinning rust, but I only want to spin the drives when backing up or restoring. That caused me to look at my shelf of spare/standby LSI HBAs (one is misbehaving a bit, so I’ll attempt a re-flash of the firmware to see if I can straighten it out; that leaves an LSI 9300-8i on the shelf) and a 4-bay external enclosure (here) to hook up to the Ubuntu/Win 10 desktop. Those drives will be where the data is stored. I have some hardware ordered to accomplish that goal. The thought here is to use an existing system rather than a dedicated backup server.

Which leads to a question on LVM which I won’t ask in this thread / post.

Now back to the topic: I haven’t ruled out any proposal thus far. I do prefer the GUI approach for ease of use, but CLI with a script is just as easy. The checksum that sanoid offers is a strong card in the deck. And as a home lab setup I may very well decide on two methods, which could be done once I redo the hardware side.

As I have said, I already have the data on a set of drives which can be read by Win/Ubuntu systems, but a restore means mounting/unmounting etc. to copy back.

I do like the solutions everyone has proposed so far.

So thanks to everyone who has responded.

------------[edit to add update on hardware]------------

I referenced the two LSI 9300s I have on the shelf as spares. One is the 16i, the other is the 8i. In reality they both use the same chipset etc., but the 16i has two additional ports (4 vs. 2 on the 8i). Background: I ran into problems with the 16i and lost one controller, effectively making it an 8i. I chose this one to work on as it may be on its last legs. So I installed the 16i into the desktop, then had the thought to get brutal with it and re-flash the BIOS, EFI BIOS, and firmware. If it’s going to fail, let’s fail it now, was my thought. The end result was a success: I gained back the other channel, which honestly I didn’t expect to happen. Now the personal dilemma: return it to the shelf as a spare and use the 8i, or continue on with it. The great part is that it now makes the backup solution even easier to achieve. Only posting this part as an FYI for those interested in the hardware side. The main focus is the software solution.

In the testing thus far

Grsync did rather dismally and choked on a large transfer.

But rsync from the command line is working beautifully. I used:

`sudo rsync -azvP mike@<server IP>:/mediapool1 /backup`

What I do like with rsync is that it tells me exactly how many files are left to go based on the scan and how many have been checked, as well as the progress of the current file transfer.

Another option/recommendation was qt-fsarchiver, which again worked great, even in a massive TB-scale transfer. There is quite a bit I liked about this one as well.

Will need to test sanoid/syncoid and rdiff-backup. (Hopefully I didn’t miss a recommendation; if I did, I’ll review the recommendations and add it.)

Great! I have a habit of adding --dry-run at the end, or -n does the same thing. Simulates what will happen before committing. If you like the simulation results then remove the flag. This is particularly important if you ever start using any of the --delete options in rsync which basically will delete from your target any files you deleted from source. The delete flag can wipe a target if you are not careful. -a without --delete will just add to your target. -n is a good habit.
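To make the preview-first habit concrete, here is a small sketch that builds the same `rsync -azvP` command with and without `--dry-run`. The `SRC` and `DEST` defaults are placeholders (the real server address was never posted), and `backup_cmd` is a hypothetical helper name. It only prints the command so you can look it over before pasting it.

```shell
#!/bin/sh
# Sketch only: print the rsync command with or without --dry-run.
# SRC/DEST defaults are placeholders, backup_cmd is a made-up helper name.
SRC="${SRC:-mike@server:/mediapool1}"
DEST="${DEST:-/backup}"

backup_cmd() {
    # $1 = "preview" adds --dry-run so rsync only reports what it would do;
    # anything else prints the real command.
    if [ "$1" = "preview" ]; then
        echo "rsync -azvP --dry-run $SRC $DEST"
    else
        echo "rsync -azvP $SRC $DEST"
    fi
}

# Usage:
#   backup_cmd preview   # check the simulated run first
#   backup_cmd commit    # then run the printed command for real
```

One thing to watch in the preview output: without a trailing slash on the source, rsync copies the `mediapool1` directory itself into `/backup`, while `/mediapool1/` would copy only its contents.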

Right now I’ve got rsync running a backup of just shy of 7TB, which compared to cp is great.

I know I went on and on about the hardware which I just set up on the desktop, which kind of made some think I was using a NAS as well as the NFS.

Basically, in lieu of buying a 12TB external drive to plug into the desktop, I used stuff I have lying around to create a drive setup with a little more storage capacity that can be taken offline and only powered on when needed, just like an external device. That saves about $300.00 retail before tax, which is what a 12TB external drive runs. I used LVM as the volume manager, set the drives up to write linearly, and didn’t update fstab, so yeah, I have to mount them manually. I figured LVM would complain the least to the desktop when the drives are offline and powered down (although ZFS in a raid0 might work as well, I just had the nagging feeling it would complain).

Just an update on the LVM mounting/dismounting for the backups: thus far the desktop hasn’t complained one bit, and it is working well after all the mounting etc. as well as power cycling off and on. Keeping fingers crossed.

@vidtek99 I went deeper into the specs of the small hard disk PSU I ordered and yeah… it’s not going to work. But your PM about your hot swap bay with a power button caused me to search for a solution, and I finally found a switch that takes the place of a blank PCI slot and interrupts the power circuit to the hot swap bay (enclosure, as I refer to it). So that is on the order list for this upcoming Monday, and I’m sure the desktop’s 1200 watt PSU can handle 4 more drives lol. I like the idea of a switch better anyway; it cleans things up a bit. Thanks for the tip.

Here is a PM from @rubi1200; I thought it may interest all readers of this thread.

Hi there, I was intrigued by your post and decided to check out qt-fsarchiver. It works exactly as you described it and I am very impressed by the ease of use, ability to save a live partition, and the size of the compressed backup.

However, I have a few questions if you don’t mind:

- can you set up automated backups either from within the app or perhaps a cronjob?

- if I have, let’s say, 3 partitions on sda and sda3 controls GRUB for all partitions and then I backup and restore sda2 will the restore mess up my custom GRUB settings from sda3?

Thanks and regards,

Rubi

- I have never tried to set up automated backups with QT-fsarchiver, I like to see what happens and it only takes 3-4 mins so no, I don’t know but I can see no reason why it wouldn’t work as a cron job. There is no option in the app for auto backup (or if there is I haven’t come across it). As Dieter Baum is constantly tweaking and improving it I wouldn’t be surprised to see this option being added.

- This is a little bit tricky. In the past I have had issues with this, but for the last year or so they seemed to have been ironed out in the latest versions. I have my efi partition set up on nvme0n1p1 (in a sata it would be sda1). For the last couple of years when saving the PBR it has worked flawlessly. However, in previous times I have had issues with not booting even when I saved the PBR. I have had to boot from a live USB linux and chroot into the system and fix it manually or use a boot rescue disk. In all cases the backups have been perfect apart from the boot issues.

I will post this reply in the thread as it may be of interest to others.

Best regards, Tony.
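On the cron question, one untested idea is to skip the GUI and call the command-line `fsarchiver` tool that QT-Fsarchiver is a front end for. The sketch below is an assumption-heavy example, not something confirmed in this thread: it assumes plain `fsarchiver` is installed and that its `savefs` options `-Z` (zstd level) and `-A` (allow a filesystem mounted read-write) behave as its man page describes. The device and output directory are taken from the notes file above, the archive naming is invented, and it does not save the PBR the way the GUI can.

```shell
#!/bin/sh
# Sketch only: a cron-friendly wrapper around the fsarchiver CLI.
# PRINT_ONLY, run, archive_name and save_root are made-up names.
PRINT_ONLY="${PRINT_ONLY:-1}"            # 1 = print the command, 0 = run it
DEVICE="${DEVICE:-/dev/nvme0n1p5}"       # partition from the notes file
OUTDIR="${OUTDIR:-/media/m2/backup}"     # backup path from the notes file

run() {
    if [ "$PRINT_ONLY" = "1" ]; then echo "$*"; else "$@"; fi
}

archive_name() {
    # $1 = optional date stamp, defaults to today (YYYY-MM-DD)
    echo "${OUTDIR}/root-${1:-$(date +%Y-%m-%d)}.fsa"
}

save_root() {
    # -Z 8 = zstd level 8, -A = allow saving a read-write mounted filesystem
    run fsarchiver savefs -Z 8 -A "$(archive_name "$1")" "$DEVICE"
}

# Usage: save_root            # today's archive
#        save_root 2025-01-01 # explicit stamp
```

A root crontab entry could then call a script like this once a day, but check the printed command by hand first.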

I’ve added a feature request here if anyone would like to add comments or ideas.

Mike - Here is a link to the 3.5" caddies I bought. Caddy

I see it’s NLA on that site; here is the blurb Orico has on it: https://www.orico.cc/usmobile/product/detail/id/3645

Yes that is a great SATA solution, and I’m glad you posted it.

And honestly it will serve 70+% of the viewers, and would serve me if I used just SATA, which in this case sadly I don’t. The SAS drives and your mention of that caddy did take me to this solution (see the PCI power switch link below). BUT if I was just SATA I’d be all about it.

And the maker/re-seller could have made a SAS/SATA option quite easily. Even with that said, I like the design. I might have to e-mail them a suggestion about that, as with a SAS backplane it could easily support both types of drives, at the SAS II level as well as SATA (6Gb/s).

Yes, I know it shows not in stock, as I ordered the last one the seller had. But there are more from other sellers for those in my hardware situation, as one solution but not the only one.

Some may view these types of posts as thread drift, but I beg to differ, as the hardware can and will affect the backup solutions offered by software.

Added: I did find the caddies that vidtek99 referenced on Newegg when I was looking for a 2.5" to 3.5" adapter to use in 3.5" caddy/trayless drive bay enclosures.

Here they are. Like I said, it is a great option, as one can extend the life of the backup media by not spinning them when you’re not archiving or restoring… Great tip.

OK I’m still trying to get my head wrapped around sanoid and syncoid. But I think I might have part of the gist…

Syncoid should be run from the backup device, pulling from the source.

Sanoid usually runs on the source to handle the snapshots.

syncoid -r root@192.168.XX.XX:mediapool1 mediapool1/backup

Now that I’ve typed all that, the wheels turning in my head have effectively confused me again LMAO. Back to reading I go.

Just background stuff going on_______________

I did try out LVM to assemble the 4TB drives into a larger volume to accept backups. Yeah, LVM didn’t survive powering down the drives. So I’ve set up a different way to accomplish the task.

I’m using ZFS, exporting the pool prior to shutting down the drives’ power and then importing the pool when powering the drives back up. It seems to work so far (really trying hard not to buy a large 14/16TB drive to back up to). I’ve exported the pool, shut down the power to the drives, shut down the desktop completely, then come back, powered up the drives, and imported the pool: no errors thus far and no complaining from ZFS, which honestly I figured there would be. Now to do this for another week or so to see if it holds up. If not, then I’ll either set up a dedicated backup server or buy a large drive.
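The export/import routine described above could be wrapped in two small functions, roughly like this. The pool name `backuppool` is a placeholder (the real name wasn’t posted), and `PRINT_ONLY`, `run`, `power_down_prep` and `power_up_check` are made up for this sketch. `zpool export`, `zpool import` and `zpool status -x` are standard OpenZFS commands.

```shell
#!/bin/sh
# Sketch only: export a pool before cutting drive power, import it after.
# backuppool is a placeholder name; the helpers are hypothetical.
PRINT_ONLY="${PRINT_ONLY:-1}"   # 1 = print the commands, 0 = run them
POOL="${POOL:-backuppool}"

run() {
    if [ "$PRINT_ONLY" = "1" ]; then echo "$*"; else "$@"; fi
}

power_down_prep() {
    # Export unmounts the datasets and marks the pool as cleanly detached,
    # so it is safe to switch the drives off afterwards.
    run zpool export "$POOL"
}

power_up_check() {
    # Import the pool once the drives have spun up, then ask for a
    # problems-only status report (-x).
    run zpool import "$POOL" && run zpool status -x "$POOL"
}

# Usage: power_down_prep   (then switch the enclosure off)
#        power_up_check    (after switching it back on)
```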

LVM allows adding multiple physical volumes to a single volume group, but I prefer to use one physical volume per volume group. There is the risk, if you have one single giant volume group that is comprised of multiple discs, that if one of your discs goes down, then you potentially lose all the data in that VG.

Another idea is to not automount your volumes until you need them. I learned this tip from someone advising not to mount backup volumes until you need to process a backup, then umount those volumes.

But, I think you will like sanoid/syncoid. Syncoid will push or pull, based on how you set up the command. Sanoid handles your snapshotting & Syncoid will replicate your data based on your most recent snapshot. If you run each on a daily cron, then essentially you have versioned backups
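As a rough sketch of that daily routine: `sanoid --cron` takes and prunes snapshots according to `/etc/sanoid/sanoid.conf` on the source machine, and `syncoid -r` replicates a dataset and its children. The source and target below are copied from the command posted earlier in the thread (address left elided as posted); `PRINT_ONLY`, `run`, `take_snapshots` and `pull_replica` are hypothetical names, and the split of which box runs which function is just one possible layout.

```shell
#!/bin/sh
# Sketch only: daily snapshot + pull replication with sanoid/syncoid.
# Helper names are made up; SRC/DST come from the command posted above.
PRINT_ONLY="${PRINT_ONLY:-1}"   # 1 = print the commands, 0 = run them
SRC="${SRC:-root@192.168.XX.XX:mediapool1}"   # address elided as posted
DST="${DST:-mediapool1/backup}"

run() {
    if [ "$PRINT_ONLY" = "1" ]; then echo "$*"; else "$@"; fi
}

take_snapshots() {
    # On the source box: create new snapshots and prune expired ones
    # according to /etc/sanoid/sanoid.conf.
    run sanoid --cron
}

pull_replica() {
    # On the backup box: -r replicates the dataset and all its children.
    run syncoid -r "$SRC" "$DST"
}

# Usage: call take_snapshots from cron on the source,
#        and pull_replica from cron on the backup machine.
```

In a pull setup like this, the backup machine reaches the source over ssh, so key-based login for that user needs to be working first.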

Yes, and I had them in that configuration, manually mounted, in a volume. Nothing against LVM: I did put up a 3-drive physical volume in a single volume group on the NFS, and it is working well thus far. I apparently failed on the desktop machine trying to replicate what I had done on the NFS.

That being said, I know that LVM can be done with redundancy; I went for drive space efficiency instead. The burp in LVM is probably a skill set deficiency on my end, which makes it not the best choice out of the gate for me, unless the learning curve is overcome quickly.

This is why I went back to ZFS, which I do understand, and which can be assembled in the exact same manner. It also allows checksums, a feature I really like. Another factor that I like is that ZFS is transferable/portable to another system.

As this gets closer to the end, I’m seeing that a true solution will probably be a mix of what folks have contributed to this topic.

I really like rsync, and QT-Fsarchiver is really good as well and just as capable as rsync; as @vidtek99 points out, it is definitely a tool to keep in one’s toolbox/kit. But (and this is not a negative) on initial crank-up it seems geared toward the whole file system on the target/source. It is easily set up to capture just the data side, though. The layout is highly usable.

Now just to get my head / mind wrapped around sanoid/syncoid. Which to be blunt is me mentally making the employment harder than need be. I just have not had the light bulb moment yet when all makes sense.

In my earlier post on syncoid and sanoid I used very poor descriptions of my understanding.

Let’s see if my English actually portrays my understanding.

Syncoid just pulls or pushes a replication of snapshotted datasets to a target (local or remote).

Sanoid, on the other hand, is basically a snapshot manager run on the host’s datasets.

(In EVERY explanation I find, VMs are used SOLELY as the example, which causes the confusion on my end. I don’t run VMs, nor do I care to at present.)

I use LuckyBackup. It is a GUI front end for (I believe) rsync. I like it because the backup folder it gives me is just a copy of my Documents folder. It has snapshots (as many as you want), so that if you accidentally delete some files you can go back in time to get the version you want. I back up to a multi-terabyte USB disk.

I keep all the files I, and others, have created in a separate disk partition called Shared_Data. Within the partition I have folders for different people (they can’t see each other’s data unless permission is given). I have symbolic links from each user’s /home folder to their folders in Shared_Data. I back up each user’s data separately. I don’t back up the OS as I can always recreate that. I moved the “setup” files for things like libreoffice to my Data folder so that I merely have to put a symbolic link into

I had to create a script to run LuckyBackup, because if I forgot to plug in the backup disk, it would abort. I also had to set a parameter in LB that went directly to rsync, but I don’t remember what it was. I found one bug: LB does not retain permissions on any snapshot other than the latest.
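The poster’s actual script isn’t shown, but the “is the backup disk plugged in?” check could look something like this sketch. It uses the standard `mountpoint -q` test and then runs whatever command you hand it. The function name and the `/media/backup` path in the usage line are made up, and since I’m not sure of LuckyBackup’s command-line options, the example calls plain rsync instead.

```shell
#!/bin/sh
# Sketch only: run a backup command only when the backup disk is mounted.
# run_if_mounted and the example paths are hypothetical.
run_if_mounted() {
    # $1 = mount point of the backup disk, remaining args = command to run
    target="$1"
    shift
    if mountpoint -q "$target"; then
        "$@"
    else
        echo "backup disk not mounted at $target, skipping" >&2
        return 1
    fi
}

# Usage:
#   run_if_mounted /media/backup rsync -a "$HOME/Documents/" /media/backup/Documents/
```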

@anon36188615

Before I go and install the sanoid/syncoid package, I had a thought on how to deploy it, BUT here is what I don’t know: if I just want to use syncoid to pull snapshots, do I have to have the packages on both the target/source machine and the host/backup server?

I’ve not seen anything that says it’s actually required on both ends, unless it’s in a push configuration in lieu of a pull.

From what I’m seeing, syncoid is actually the workhorse, and sanoid is more of a snapshot manager.

Am I missing something?

@lachenmaier after you mentioned LuckyBackup I did do a little looking into it, which I’ll explore a bit more after the sanoid package.

Nope, you’re not missing a thing.

I’ve got another tool if you’re going to go back to full ZFS root. (No matter though)

Teaser

```
┌─ Datasets ─────────────────────────────────────┌─ Snapshots ────────────────────────────────────┐
│dozer                                           │tank@11-1                                       │
│tank                                            │tank@12-27                                      │
│zpcachyos                                       │tank@12-31                                      │
│zpcachyos/ROOT                                  │zpcachyos@1-8-25                                │
│zpcachyos/ROOT/cos                              │zpcachyos/ROOT@1-8-25                           │
│zpcachyos/ROOT/cos/home                         │zpcachyos/ROOT/cos@1-8-25                       │
│zpcachyos/ROOT/cos/root                         │zpcachyos/ROOT/cos/home@1-8-25                  │
│zpcachyos/ROOT/cos/varcache                     │zpcachyos/ROOT/cos/root@1-8-25                  │
│zpcachyos/ROOT/cos/varlog                       │zpcachyos/ROOT/cos/varcache@1-8-25              │
│                                                │zpcachyos/ROOT/cos/varlog@1-8-25                │
│                                                │                                                │
│                                                │                                                │
└────────────────────────────────────────────────└────────────────────────────────────────────────┘
F1 Help F2 Promote F3 ____ F4 ____ F5 Snapshot F6 Rename F7 Create F8 Destroy F9 Get all F
```